Improving Access to Portuguese Tax Authority Debtors Data

3 min read

|

Mar 11 2024

Improving Access to Portuguese Tax Authority Debtors Data

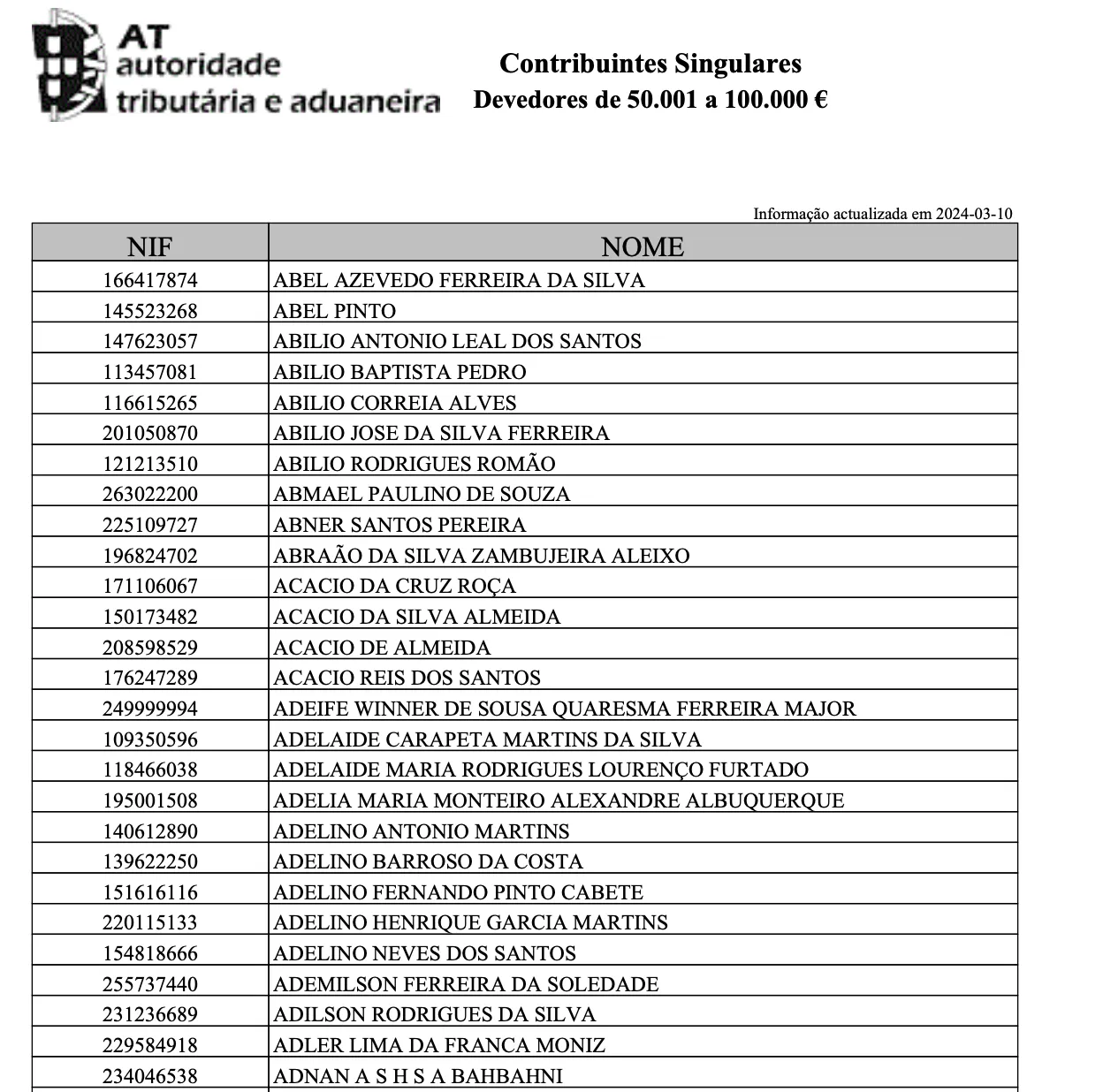

Navigating government portals, like the Portuguese Tax Authority, can often be a daunting task, especially when data is stored in inconvenient formats. One such challenge is the Portuguese Tax Authority storing debtors’ data in PDF files, making it difficult for individuals and companies to easily find the information they need.

The Problem

The Portuguese Tax Authority’s keeps track of both individuals and companies that hold a large enough debt. This information is stored in multiple PDFs in their website and it seems to be updated daily. Each PDF basically holds a huge table in it, something like this:

The lack of a user-friendly interface makes it time-consuming and tedious for individuals and businesses to identify debtors.

The solution: Debtors scraper and interactive table

To address this issue, I created 2 tools:

- a debtors scraper (a python-based scraper)

- a frontend react app, with a table with filtering, search and export functionality.

The live tool can be seen here.

The scraper: How it works

This automated tool downloads and extracts data from the PDF files provided by the Portuguese Tax Authority, converting it into a more accessible JSON format. The scraper runs daily on GitHub Actions, ensuring that the debtors’ data is regularly updated and readily available. It follows a simple workflow:

- PDF Parsing: The scraper utilizes Python, pypdf and tabula to parse information from the PDF files obtained from the Portuguese Tax Authority’s portal.

- Data Transformation: Extracted data is then transformed into JSON format, making it more readable and versatile.

- Daily Updates: Leveraging GitHub Actions, the scraper runs daily to fetch the latest debtors’ data, ensuring the information is always current. You can check this workflow here.

The ouptut of this scraper is basically a json file that’s used as an API endpoint. Yes, I’m using a github repository as a backend service, because it works (and it’s free).

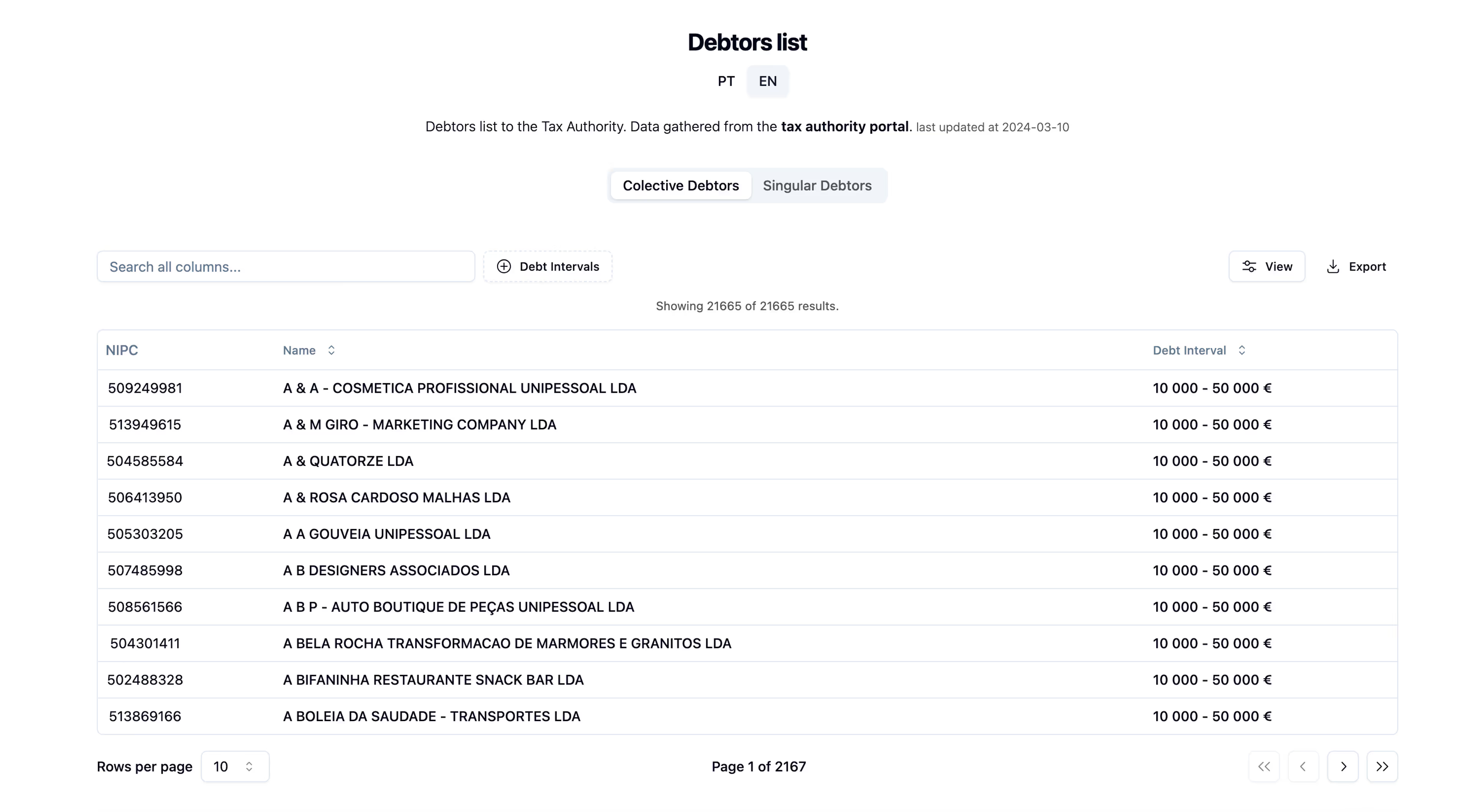

The frontend: Making data accessible

Realizing the importance of providing a user-friendly interface for the JSON data, I developed a frontend web application using React, React Table and shadcn ui.

The frontend reads from the main JSON file, debtors.json, and displays the data in an interactive table that allows users to easily search, filter, and download the entire dataset, providing a seamless experience for those navigating the debtors’ information.

It’s currently running on cloudflare pages, for free.

Conclusion

With the scraper and its accompanying frontend, I aimed to simplify access to the Portuguese Tax Authority’s debtors’ data. By automating the extraction process and presenting the information in a more user-friendly manner, individuals and businesses can now navigate the dataset effortlessly.

Oh, this is all running for free, in case you haven’t notice.

Links

- the scraper code: https://github.com/franciscobmacedo/debtors-scraper

- the frontend code: https://github.com/franciscobmacedo/debtors

- the live app: https://debtors.fmacedo.com/